| Introduction

Paramter 를 특정 데이터에 맞춰서 최적화

강한 Hypothesis 를 만든다. Hypothesis 안에는 Parameter가 존재하고, 결국 데이터로 이 Parameter를 최적화하는 과정

| Naive Bayes & Logistic Regression 비교

왜 비교해보나요?

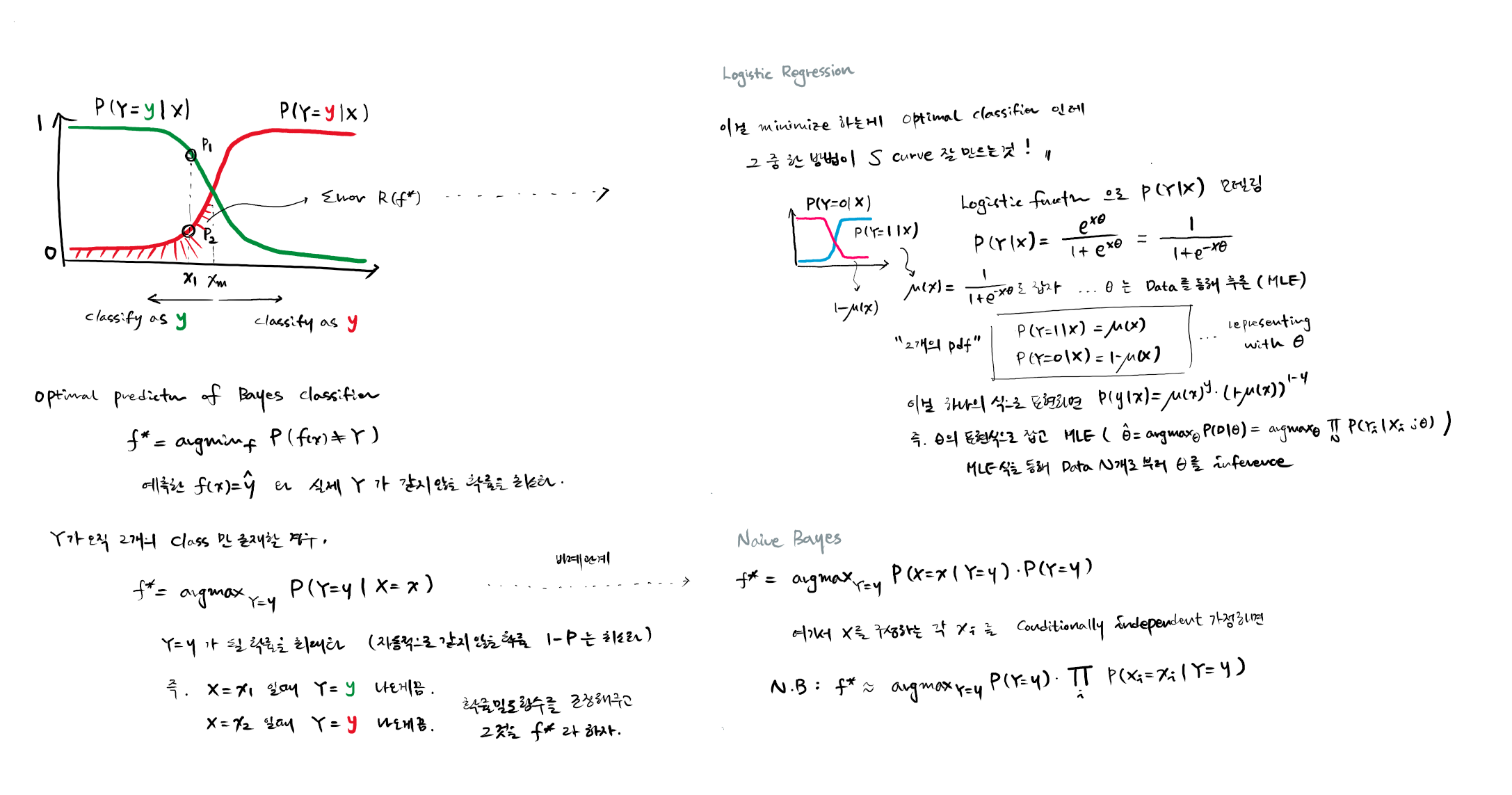

대표적인 Genertavie & Discriminative Pair 관계

지난 시간 배운 Naive Bayes 는 CATEGORICAL input feature 대상

이번에는 CONTINUOUS input feature 대상으로 공부해보자. ... (Logistic Regression 과 Competitive 하도록)

대표적으로 해볼 수 있는 것이 Gaussian Naive Bayes

| Gaussian Naive Bayes

$$ f_{NB}(x) = argmax_{\ Y=y}\ P(Y=y) \cdot \prod_{i}^{d} \ P(X_i=x_i \mid Y=y) $$

where $d$ is # of input features

여기서 $P(X_i=x_i \mid Y=y)$ 가 Gaussian Distribution 을 따른다고 가정해보자.

그리고 Gaussian 분포의 Parameters $\mu,\ \sigma^2$ 을 inference 하는 문제로 생각해보자.

특정 Y class 가 Given 인 상황에서 X 에 대한 평균과 분산은 측정가능할 것

특정 Y class 의 X 는 주어진 평균과 분산의 분포를 따르며,

$X_i$ 는 거기서 Sampling 된 결과물이다라고 생각.

$$ P(X_i \mid Y, \mu, \sigma^2) = \frac{1}{\sigma \sqrt{2\pi}}e^{-\frac{(X_i-\mu)^2}{2\sigma^2}} $$

정리하면 아래와 같이 표현 가능

$$ P(Y=y) \cdot \prod_{i}^{d} \ P(X_i=x_i \mid Y=y) = \pi_k \prod_{i}^{d} \frac{1}{\sigma_k^{\ i}c} \cdot exp(-\frac{1}{2}\left ( \frac{X_i-\mu_{k}^{\ i}}{\sigma_k^{\ i}} \right )^2) $$

where $P(Y=y)=\pi_k$

Gaussian Distribution 대입하고 $\sigma_1^{\ i} = \sigma_2^{\ i}$ 가정하면

Logistic Regression 과 동일한 형태로 유도 가능

$$

\begin{align*}

P(Y=y \mid X) &= \frac{\pi_1 \prod_{i}^{d} \frac{1}{\sigma_1^{\ i}c} \cdot exp(-\frac{1}{2}\left ( \frac{X_i-\mu_{1}^{\ i}}{\sigma_1^{\ i}} \right )^2)}{ \pi_1 \prod_{i}^{d} \frac{1}{\sigma_1^{\ i}c} \cdot exp(-\frac{1}{2}\left ( \frac{X_i-\mu_{1}^{\ i}}{\sigma_1^{\ i}} \right )^2) + \pi_2 \prod_{i}^{d} \frac{1}{\sigma_2^{\ i}c} \cdot exp(-\frac{1}{2}\left ( \frac{X_i-\mu_{2}^{\ i}}{\sigma_2^{\ i}} \right )^2) } \\

&= \frac{1}{1+\frac{\pi_2 \prod_{i}^{d} \frac{1}{\sigma_2^{\ i}c} \cdot exp(-\frac{1}{2}\left ( \frac{X_i-\mu_{2}^{\ i}}{\sigma_2^{\ i}} \right )^2)}{\pi_1 \prod_{i}^{d} \frac{1}{\sigma_1^{\ i}c} \cdot exp(-\frac{1}{2}\left ( \frac{X_i-\mu_{1}^{\ i}}{\sigma_1^{\ i}} \right )^2)}} \\

&= \frac{1}{1+\frac{\pi_2 \prod_{i}^{d} exp(-\frac{1}{2}\left ( \frac{X_i-\mu_{2}^{\ i}}{\sigma_2^{\ i}} \right )^2)}{\pi_1 \prod_{i}^{d} exp(-\frac{1}{2}\left ( \frac{X_i-\mu_{1}^{\ i}}{\sigma_1^{\ i}} \right )^2)}} \\

&= \frac{1}{1+\frac{exp(- \sum_{i=1}^{d} \frac{1}{2}\left ( \frac{X_i-\mu_{2}^{\ i}}{\sigma_2^{\ i}} \right )^2 + log \pi_2)}{exp(- \sum_{i=1}^{d} \frac{1}{2}\left ( \frac{X_i-\mu_{1}^{\ i}}{\sigma_1^{\ i}} \right )^2 + log \pi_1)}} \\

&= \frac{1}{1+ exp(- \sum_{i=1}^{d} \frac{1}{2}\left ( \frac{X_i-\mu_{1}^{\ i}}{\sigma_1^{\ i}} \right )^2 + log \pi_2 + \sum_{i=1}^{d} \frac{1}{2}\left ( \frac{X_i-\mu_{2}^{\ i}}{\sigma_2^{\ i}} \right )^2 - log \pi_1) } \\

&= \frac{1}{1+ exp(-\frac{1}{2\cdot(\sigma_1^{\ i})^2} \sum_{i=1}^{d} \left \{ (X_i-\mu_1^{\ i})^2 - (X_i-\mu_2^{\ i})^2 \right \} +log \pi_2-log \pi_1) } \\

&= \frac{1}{1+ exp(-\frac{1}{2\cdot(\sigma_1^{\ i})^2} \sum_{i=1}^{d} \left \{ 2 (\mu_2^{\ i}-\mu_1^{\ i}) X_i + (\mu_1^{\ i})^2 - (\mu_2^{\ i})^2 \right \} +log \pi_2-log \pi_1) } \\

&= \frac{1}{1+e^{-X\theta}}

\end{align*}

$$

| Naive Bayes v.s. Logistic Regression

Naive Bayes classifier

Assumptions to get this formula

1. Naive Bayes assumption

2. Same variance assumption between classes

3. Gaussian distribution for $P(X \mid Y)$

4. Bernoulli distribution for $P(Y)$

# of parameters: 2 x 2 x d + 1 = 4 d + 1

Logistic Regression

Assumptions to get this formula

1. Fitting to the logistic function

# of parameters: d + 1

Assumptions, # parameters 보면 Logistic Regression 좋다.

하지만 Naive Bayes 는 Prior 정보 가미할 수 있는 부분이 좋다.

| Generative - Discriminative Pair

| Summary

Reference

문일철 교수님 강의

https://www.youtube.com/watch?v=oNTXMgqCv6E&list=PLbhbGI_ppZISMV4tAWHlytBqNq1-lb8bz&index=21

https://www.youtube.com/watch?v=oNTXMgqCv6E&list=PLbhbGI_ppZISMV4tAWHlytBqNq1-lb8bz&index=22

'Study > Lecture - Basic' 카테고리의 다른 글

| W5.L1-9. Support Vector Machine (0) | 2023.05.06 |

|---|---|

| W4.StatQuest. MLE, Gaussian Naive Bayes (0) | 2023.05.06 |

| W4.L1-6. Logistic Regression - Decision Boundary, Gradient Method (0) | 2023.04.30 |

| W3.L3-4. Naive Bayes Classifier - Naive Bayes Classifier (0) | 2023.04.30 |

| W3.L2. Naive Bayes Classifier - Conditional Independence (0) | 2023.04.30 |